Apple’s plan to scan iPhone photos for child abuse material is dead

While Apple’s controversial plan to hunt down child sexual abuse material with on-iPhone scanning has been abandoned, the company has other plans in mind to stop such material at the source.

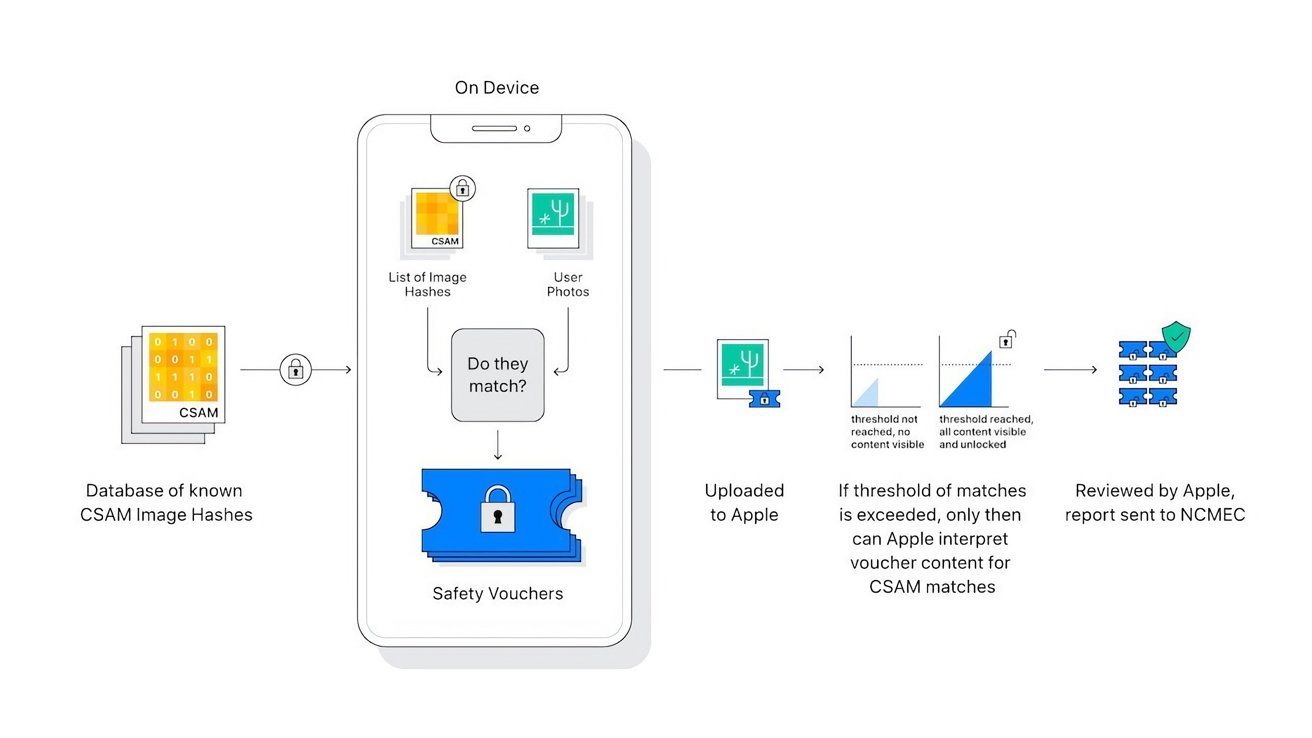

Apple’s proposed CSAM detection feature

Apple announced two initiatives in late 2021 that aimed to protect children from abuse. One, which is already in effect today, warns minors before they send or receive photos with nude content. It works using algorithmic detection of nudity and only warns the kids; the parents aren’t notified.